Enterprise-Grade RAG Architectures for Large Language Models: Scalable, Secure, and Production-Ready Designs

- Mark Chomiczewski

- 17 January 2026

- 7 Comments

Most companies think adding a large language model to their workflow is enough to get smart answers. It’s not. Without the right architecture, you’ll get slow responses, hallucinated facts, and security leaks. Enterprise-grade RAG isn’t just a fancy upgrade; it’s the only way to make LLMs reliable, fast, and compliant in real business environments.

What Enterprise RAG Actually Does

Retrieval-Augmented Generation (RAG) fixes the biggest flaw in plain LLMs: they don’t know what happened after their last training cut-off. A model trained in 2023 won’t know about your latest product launch, updated compliance rules, or last quarter’s financials. RAG solves this by pulling in live data from your internal documents, databases, and knowledge bases right before answering a question.

Here’s how it works in practice: when someone asks, "What’s our current policy on remote work?", the system doesn’t guess from memory. It first searches your HR documents for the most relevant sections, such as a PDF from last month, an updated Slack thread, or a SharePoint page. Then it feeds just those snippets to the LLM, which generates a clear, accurate answer grounded in real data. No guessing. No outdated info.
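To make the retrieve-then-generate flow concrete, here is a minimal Python sketch. It assumes a toy word-count vector in place of a real embedding model and an in-memory dict in place of a vector database; the file names, `retrieve`, and `build_prompt` are hypothetical. It only assembles the grounded prompt; the actual LLM call is left out.

```python
import math
import re
from collections import Counter


def vectorize(text: str) -> Counter:
    """Toy stand-in for an embedding model: a bag-of-words term-count vector."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, docs: dict[str, str], k: int = 3) -> list[tuple[str, str]]:
    """Return the k (source, text) pairs most similar to the query."""
    q = vectorize(query)
    ranked = sorted(docs.items(), key=lambda item: cosine(q, vectorize(item[1])), reverse=True)
    return ranked[:k]


def build_prompt(query: str, snippets: list[tuple[str, str]]) -> str:
    """Ground the LLM in the retrieved snippets and ask it to cite them as [n]."""
    context = "\n".join(f"[{i}] ({src}) {text}" for i, (src, text) in enumerate(snippets, 1))
    return (
        "Answer using only the sources below and cite them as [n]. "
        "If the sources do not contain the answer, say so.\n\n"
        f"Sources:\n{context}\n\nQuestion: {query}"
    )


# Hypothetical knowledge base: source path -> document text.
docs = {
    "hr/remote-work-2025.pdf": "Remote work policy: employees may work remotely up to three days per week.",
    "hr/pto.pdf": "PTO policy: full-time staff accrue paid time off every month.",
    "it/vpn.md": "Connect to the VPN before accessing internal systems from home.",
}
query = "What is our current policy on remote work?"
top = retrieve(query, docs, k=2)
print(build_prompt(query, top))  # this string is what would be sent to the LLM
```

Asking the model to cite `[n]` markers is what lets a reviewer trace every claim back to a specific source document.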

Companies using optimized RAG see 3-4x better accuracy and cut LLM costs by 60% compared to basic setups. That’s not theory; it’s what Tech Ahead Corp found in real enterprise deployments in 2024. The difference isn’t just in quality. It’s in trust. Legal teams, compliance officers, and finance departments won’t use AI if they can’t verify where the answer came from.

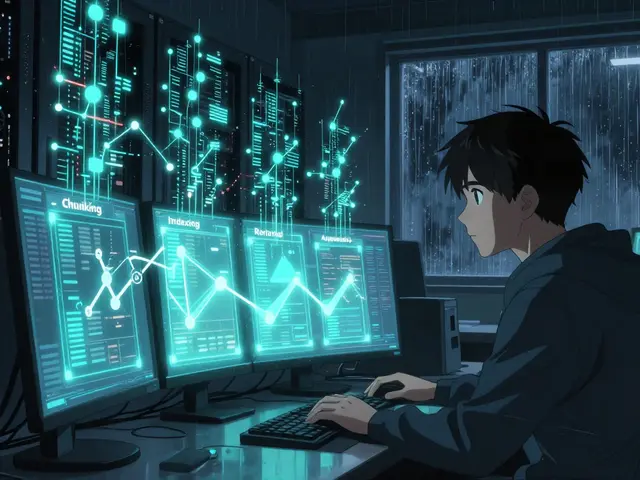

The Core Components of a Production RAG System

Enterprise RAG isn’t a single tool. It’s a pipeline made of five critical parts, each needing careful design.

- Document ingestion and chunking: You can’t throw a 50-page PDF into a model and expect good results. The system must break documents into meaningful pieces (paragraphs, sections, or even tables) while keeping context intact. OnyxData’s research shows that poor chunking leads to answers that miss key details or misinterpret relationships between data points.

- Embedding models: These turn text into numerical vectors so machines can find similar content. Cohere’s Embed v3 (2024) handles over 100 languages in one model, eliminating the need for separate encoders. This matters if your company operates globally.

- Vector database: This is where all those vectors live and get searched. PGVector (an open-source extension for PostgreSQL) can search 500K embeddings in under 2 seconds for mid-sized teams. For larger scale, LanceDB processes 15M rows with metadata filtering at similar speeds. The choice affects cost, speed, and scalability.

- Retrieval and re-ranking: Not all matches are equal. A simple cosine similarity search might pull in a document that mentions your topic but isn’t the best fit. Re-ranking models like Cohere’s reranker or cross-encoders re-sort results by actual relevance, not just vector distance.

- LLM generation with context: The final step. The LLM gets the query + top 3-5 retrieved snippets and writes a natural answer. The prompt must guide it to cite sources, avoid speculation, and stay within bounds.
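Chunking is the step teams most often get wrong. Below is a minimal sketch of one common strategy, packing whole paragraphs into size-limited chunks and repeating the last paragraph of each chunk at the start of the next. This is an illustrative assumption, not OnyxData’s method; `chunk_document`, `max_chars`, and `overlap_paragraphs` are hypothetical names. Real pipelines usually measure size in model tokens rather than characters.

```python
def chunk_document(text: str, max_chars: int = 800, overlap_paragraphs: int = 1) -> list[str]:
    """Pack whole paragraphs into chunks of at most max_chars characters.

    Paragraphs are never split, so sentences stay intact. The trailing
    `overlap_paragraphs` paragraphs of each chunk are repeated at the start of
    the next chunk to preserve context across the boundary. A single paragraph
    longer than max_chars becomes its own (oversized) chunk.
    """
    paragraphs = [p.strip() for p in text.split("\n\n") if p.strip()]
    chunks: list[str] = []
    current: list[str] = []
    for para in paragraphs:
        candidate = current + [para]
        if current and len("\n\n".join(candidate)) > max_chars:
            chunks.append("\n\n".join(current))
            # Note: current[-0:] would return the whole list, hence the explicit check.
            overlap = current[-overlap_paragraphs:] if overlap_paragraphs > 0 else []
            if len("\n\n".join(overlap + [para])) > max_chars:
                overlap = []  # drop the overlap rather than exceed the size limit
            current = overlap + [para]
        else:
            current = candidate
    if current:
        chunks.append("\n\n".join(current))
    return chunks


doc = "\n\n".join(["A" * 300, "B" * 300, "C" * 300])
for chunk in chunk_document(doc, max_chars=700):
    print(len(chunk), chunk[:3], "...", chunk[-3:])
```

The overlap costs some storage, but it means a question that spans a chunk boundary can still be answered from a single retrieved chunk.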

Missing any of these? Your RAG system will break under real pressure. A startup might get by with a basic setup. A Fortune 500 company? They need all five working together.

Four Enterprise RAG Architectures (And When to Use Each)

Not all RAG systems are built the same. Your choice depends on your data structure, team size, and compliance needs.

Centralized RAG

A single retrieval pipeline feeds one LLM. All departments use the same knowledge base. Simple to build. Easy to manage.

Best for: Companies with one main source of truth-like a unified CRM or compliance handbook. Ideal if you have a small AI team and need to move fast.

Downside: If marketing needs different docs than legal, you’re stuck. Updates affect everyone. No room for customization.

Federated RAG

Each department has its own retriever (HR uses HR docs, finance uses financial reports), but they all share the same LLM. Queries are routed based on context or user role.

Best for: Large enterprises with siloed data. Legal, compliance, and sales teams all need different information, but still want consistent answers.

Downside: Takes 6-9 months to deploy. Requires strong governance to avoid duplication and inconsistency.
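Role-based routing is the core of the federated pattern, and it can be sketched in a few lines. The sketch below assumes a hypothetical `FederatedRouter` with a toy keyword retriever standing in for each department’s vector search; the role names and documents are made up. It shows only the routing-by-role variant; context-based routing would replace the role lookup with a query classifier.

```python
from dataclasses import dataclass
from typing import Callable

# A retriever takes a query and returns matching text snippets.
Retriever = Callable[[str], list[str]]


def keyword_retriever(docs: list[str]) -> Retriever:
    """Toy stand-in for one department's vector search: match on shared words."""
    def search(query: str) -> list[str]:
        words = set(query.lower().split())
        return [d for d in docs if words & set(d.lower().split())]
    return search


@dataclass
class FederatedRouter:
    """Send a query only to the department retrievers the user's roles allow."""
    retrievers: dict[str, Retriever]
    role_to_departments: dict[str, set[str]]

    def retrieve(self, query: str, roles: set[str]) -> list[str]:
        departments: set[str] = set()
        for role in roles:
            departments |= self.role_to_departments.get(role, set())
        results: list[str] = []
        for dept in sorted(departments):  # sorted for a deterministic merge order
            if dept in self.retrievers:
                results.extend(self.retrievers[dept](query))
        return results


router = FederatedRouter(
    retrievers={
        "hr": keyword_retriever(["Parental leave policy (HR handbook)"]),
        "finance": keyword_retriever(["Q3 revenue summary", "Parental leave accrual in payroll"]),
    },
    role_to_departments={"employee": {"hr"}, "analyst": {"hr", "finance"}},
)
print(router.retrieve("parental leave", {"employee"}))
print(router.retrieve("parental leave", {"analyst"}))
```

All results would then go to the one shared LLM, which is what keeps answers consistent across departments.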

Cascading RAG

Start simple. If a query is basic (“What’s our PTO policy?”), use a fast, cheap retriever. If it’s complex (“Analyze Q3 revenue trends across EMEA and flag risks based on contract clauses”), escalate to a heavier, more accurate system.

Best for: Teams with budget constraints who still need high accuracy on critical queries. Reduces LLM API costs by 40-60% by avoiding heavy models for simple tasks.
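A cascade needs a cheap way to decide which tier a query deserves. The sketch below assumes a crude word-count and keyword heuristic; `is_complex`, `ANALYTICAL_TERMS`, and the two pipeline functions are hypothetical. In practice the router might be a small classifier model, or the system might try the cheap tier first and escalate when retrieval confidence is low.

```python
from typing import Callable

# Hypothetical trigger words; a production router might use a small classifier instead.
ANALYTICAL_TERMS = {"analyze", "compare", "forecast", "flag", "trend", "trends", "risk", "risks"}


def is_complex(query: str, max_simple_words: int = 12) -> bool:
    """Crude heuristic: long queries or analytical verbs get escalated."""
    words = [w.strip("?,.!\"'") for w in query.lower().split()]
    return len(words) > max_simple_words or any(w in ANALYTICAL_TERMS for w in words)


def cascade(query: str,
            cheap_pipeline: Callable[[str], str],
            heavy_pipeline: Callable[[str], str]) -> tuple[str, str]:
    """Return (tier_used, answer), calling the expensive pipeline only when needed."""
    if is_complex(query):
        return "heavy", heavy_pipeline(query)
    return "cheap", cheap_pipeline(query)


def cheap(q: str) -> str:
    return f"[small model + top-3 vector hits] {q}"


def heavy(q: str) -> str:
    return f"[large model + reranked hits] {q}"


print(cascade("What's our PTO policy?", cheap, heavy)[0])
print(cascade("Analyze Q3 revenue trends across EMEA and flag risks "
              "based on contract clauses", cheap, heavy)[0])
```

The savings come from the fact that most enterprise queries are simple lookups, so the expensive path runs only on the minority that needs it.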

Streaming RAG

Your knowledge base updates in real time. New support tickets, meeting transcripts, or regulatory filings are indexed within seconds, not hours. Vector databases like LanceDB now support continuous ingestion at high throughput.

Best for: Trading desks, customer support centers, or compliance teams where stale data equals risk. If your answer must reflect the latest SEC filing or a client’s last email, this isn’t optional.
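The key mechanic in streaming RAG is upserting documents as change events arrive, without letting a late, out-of-order event overwrite newer data. Here is a minimal in-memory sketch of that idea. It is not LanceDB’s API; `StreamingIndex` is hypothetical, and search is plain substring matching so the example stays self-contained where a real system would embed and store vectors.

```python
import time
from dataclasses import dataclass
from typing import Optional


@dataclass
class IndexedDoc:
    doc_id: str
    text: str
    updated_at: float


class StreamingIndex:
    """In-memory stand-in for a vector store that accepts continuous upserts.

    A real deployment would embed `text` and write the vector to the database;
    here search is substring matching so the example stays self-contained.
    """

    def __init__(self) -> None:
        self._docs: dict[str, IndexedDoc] = {}

    def upsert(self, doc_id: str, text: str, updated_at: Optional[float] = None) -> bool:
        """Insert or replace a document; return False for stale or duplicate events."""
        ts = time.time() if updated_at is None else updated_at
        current = self._docs.get(doc_id)
        if current is not None and current.updated_at >= ts:
            return False  # an older event arrived late, e.g. out-of-order delivery
        self._docs[doc_id] = IndexedDoc(doc_id, text, ts)
        return True

    def delete(self, doc_id: str) -> None:
        self._docs.pop(doc_id, None)

    def search(self, term: str) -> list[str]:
        return [d.doc_id for d in self._docs.values() if term.lower() in d.text.lower()]


index = StreamingIndex()
index.upsert("ticket-42", "Customer reports VPN outage in EMEA", updated_at=100.0)
index.upsert("ticket-42", "VPN outage resolved after config rollback", updated_at=200.0)
index.upsert("ticket-42", "Customer reports VPN outage in EMEA", updated_at=150.0)  # late, ignored
print(index.search("resolved"))  # ['ticket-42']
print(index.search("EMEA"))      # []
```

Comparing timestamps on every write is what keeps a delayed event from silently reintroducing stale data, which is exactly the risk streaming RAG is meant to remove.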

Security and Compliance Are Non-Negotiable

Enterprise RAG isn’t just about accuracy. It’s about control.

Imagine a lawyer asks, “What’s our liability in the Berlin contract?” The system pulls a document from a shared drive. But what if that document contains PII (employee names, bank details, or social security numbers) and the LLM accidentally repeats it in its answer? That’s a GDPR violation: a fine of up to €20 million (or 4% of global annual turnover, if higher) waiting to happen.

That’s why top RAG systems include:

- Role-based access control: Only users with permission can retrieve certain documents.

- PII masking: Automatically redacts names, emails, and IDs before sending data to the LLM.

- Audit logs: Every query, every document retrieved, every response generated is recorded. Useful for compliance audits.

- Air-gapped deployments: For ultra-sensitive industries (defense, healthcare), the entire RAG stack runs inside private clouds with zero internet access.
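The first three controls sit between retrieval and the LLM, and the sketch below shows one way to chain them. It assumes hypothetical names (`Document`, `secure_context`, `PII_PATTERNS`) and uses a few regular expressions purely for illustration; real deployments typically rely on dedicated PII-detection tooling with far broader coverage, and write audit entries to durable, append-only storage rather than a Python list.

```python
import re
from dataclasses import dataclass


@dataclass(frozen=True)
class Document:
    doc_id: str
    text: str
    allowed_roles: frozenset[str]


# Illustrative patterns only: real PII detection needs much broader coverage
# (names, addresses, national ID formats, IBANs written with spaces, ...).
PII_PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "IBAN": re.compile(r"\b[A-Z]{2}\d{2}[A-Z0-9]{11,30}\b"),
}


def mask_pii(text: str) -> str:
    """Replace each pattern match with a placeholder such as [EMAIL]."""
    for label, pattern in PII_PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text


def secure_context(user: str, roles: set[str], query: str,
                   retrieved: list[Document], audit_log: list[dict]) -> list[str]:
    """Enforce access control, record an audit entry, then mask PII for the LLM."""
    visible = [d for d in retrieved if roles & d.allowed_roles]  # role-based access control
    audit_log.append({"user": user, "query": query, "doc_ids": [d.doc_id for d in visible]})
    return [mask_pii(d.text) for d in visible]


docs = [
    Document("berlin-contract",
             "Liability capped per clause 9. Contact anna.schmidt@example.com",
             frozenset({"legal"})),
    Document("payroll-2025", "SSN 123-45-6789, IBAN DE89370400440532013000",
             frozenset({"hr"})),
]
log: list[dict] = []
print(secure_context("lawyer-17", {"legal"},
                     "What's our liability in the Berlin contract?", docs, log))
print(log)
```

Filtering before masking matters: a document the user may not see never reaches the prompt at all, and masking then catches PII inside the documents they are allowed to see.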

Harvey AI and Azumo both report that 92% of legal and healthcare clients require these controls before even testing a RAG system. If you’re in finance, healthcare, or law, you don’t get to skip this.

Choosing Your Vector Database: PGVector vs. LanceDB

The vector database is the engine of your RAG system. Pick wrong, and you’ll pay for it in speed, cost, or scalability.

| Feature | PGVector (PostgreSQL) | LanceDB |
|---|---|---|
| Scalability | Vertical scaling only (bigger server, more RAM) | Horizontal scaling (add more nodes, handle 100M+ embeddings) |
| Latency (500K embeddings) | Under 2 seconds | Under 2 seconds |
| Metadata filtering | Basic, limited performance at scale | High-performance filtering by tags, dates, users |
| Deployment | Centralized, single instance | Decentralized, works with S3, Azure Blob, Google Cloud |
| Best for | Teams under 100K documents, existing PostgreSQL users | Large-scale, multi-cloud, real-time update needs |

If you’re already using PostgreSQL, PGVector is a low-friction start. But if you’re building for scale, handling billions of documents, or need to store data across cloud regions, LanceDB is the future. Harvey AI’s 2024 benchmarks show LanceDB’s ingestion speed is 3x faster than open-source versions, making it ideal for streaming RAG.

When NOT to Use RAG

Just because you can use RAG doesn’t mean you should.

Some tasks are better solved another way:

- Stylistic consistency: If you need every response to sound like your brand’s tone (e.g., marketing copy), fine-tuning the LLM is better than feeding it snippets.

- Static knowledge: If the answer is always the same (“What’s our return policy?”), hardcode it. No need to search a database every time.

- Structured classification: Is this email spam? Is this invoice valid? Use a classifier, not an LLM with RAG. It’s faster, cheaper, and more accurate.
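For the static-knowledge case, "hardcode it" can be as simple as checking a lookup table before the retrieval pipeline runs. The sketch below assumes a hypothetical `STATIC_ANSWERS` table with a made-up answer, and a `rag_pipeline` callable standing in for the full system.

```python
from typing import Callable

# Hypothetical canned answers for questions whose answer never changes.
STATIC_ANSWERS = {
    "what's our return policy?": "Items can be returned within 30 days with a receipt.",
}


def normalize(question: str) -> str:
    """Lowercase and collapse whitespace so trivial variations still match."""
    return " ".join(question.lower().split())


def answer(question: str, rag_pipeline: Callable[[str], str]) -> str:
    """Serve fixed answers directly; only call the RAG pipeline for everything else."""
    canned = STATIC_ANSWERS.get(normalize(question))
    return canned if canned is not None else rag_pipeline(question)


def fake_rag(q: str) -> str:
    return f"RAG answer for: {q}"


print(answer("What's our  return policy?", fake_rag))
print(answer("Summarize the Berlin contract", fake_rag))
```

Exact matching is deliberately strict; anything it misses simply falls through to RAG, so the shortcut can only save cost, never produce a wrong canned answer for an unrelated question.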

Techment’s 2026 guide warns that 40% of early RAG projects fail because teams used it for the wrong problem. Don’t force RAG where simpler tools work better.

What’s Next for Enterprise RAG

By 2027, Gartner predicts 85% of enterprise knowledge systems will use RAG. Here’s what’s coming:

- Multi-agent RAG: Instead of one LLM, you’ll have specialized agents: one for retrieval, one for reasoning, one for validation. They debate internally before giving an answer. More accurate, harder to fool.

- Hybrid search: Combining vector search with keyword matching and semantic rules. Think Google Search meets enterprise docs.

- Multimodal RAG: Answering questions using text, images, and spreadsheets all at once. “Show me the sales trend graph from last quarter and explain why it dropped.”

- Continuous learning: Systems that auto-update their knowledge base based on user feedback. If 10 people ask for clarification on an answer, the system flags that doc for review.
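Hybrid search needs a way to merge a keyword ranking and a vector ranking whose raw scores are not comparable. The article doesn’t say which method to use; reciprocal rank fusion (RRF), sketched below, is one widely used option because it only looks at rank positions. The document IDs are hypothetical, and `k = 60` is the constant commonly used in practice.

```python
from collections import defaultdict


def reciprocal_rank_fusion(rankings: list[list[str]], k: int = 60) -> list[str]:
    """Merge several ranked lists of doc IDs into one list.

    Each document scores sum(1 / (k + rank)) over every list it appears in
    (rank is 1-based), so documents ranked well by both keyword and vector
    search rise to the top, and raw scores never need to be normalized.
    """
    scores: dict[str, float] = defaultdict(float)
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] += 1.0 / (k + rank)
    return sorted(scores, key=lambda d: scores[d], reverse=True)


keyword_hits = ["contract-7", "policy-2", "memo-9"]  # e.g. from a BM25 / full-text index
vector_hits = ["policy-2", "faq-1", "contract-7"]    # e.g. from the vector database
print(reciprocal_rank_fusion([keyword_hits, vector_hits]))
```

Here `policy-2` wins because it ranks near the top of both lists, while documents found by only one method land lower.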

These aren’t sci-fi. They’re already being piloted by companies like JPMorgan Chase, Siemens, and Deloitte.

Getting Started: Your First 30 Days

Don’t try to build the perfect system on day one. Start small.

- Pick one high-impact use case: Customer support answers? Internal policy lookup? Compliance checklists?

- Collect 50-100 relevant documents: PDFs, Word files, SharePoint pages. No need for everything.

- Use a managed service: Try Cohere’s RAG toolkit or Azure AI Search. Avoid self-hosting at first.

- Measure success: Track accuracy (do answers match the source?), latency (under 1.5 seconds?), and user satisfaction (survey users after 5 uses).

- Iterate: Fix bad chunks. Tune your prompts. Add metadata filters. Add access controls.
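Measuring the first two metrics can start as a small script over a hand-labeled test set. The sketch below assumes a hypothetical `EvalResult` record per test question. It uses "did the expected source document get retrieved?" as a rough proxy for accuracy, which checks retrieval only and not the generated wording. It reports nearest-rank p95 latency against the 1.5-second target. User-satisfaction surveys are outside its scope.

```python
import math
from dataclasses import dataclass


@dataclass
class EvalResult:
    expected_source: str          # document a reviewer says should answer the question
    retrieved_sources: list[str]  # documents the system actually retrieved
    latency_s: float              # end-to-end response time in seconds


def percentile(values: list[float], pct: float) -> float:
    """Nearest-rank percentile, pct in (0, 100]."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]


def summarize(results: list[EvalResult], latency_target_s: float = 1.5) -> dict:
    if not results:
        raise ValueError("need at least one evaluation result")
    hits = sum(r.expected_source in r.retrieved_sources for r in results)
    p95 = percentile([r.latency_s for r in results], 95)
    return {
        "source_hit_rate": hits / len(results),
        "p95_latency_s": p95,
        "meets_latency_target": p95 <= latency_target_s,
    }


results = [
    EvalResult("hr/remote.pdf", ["hr/remote.pdf", "hr/pto.pdf"], 0.8),
    EvalResult("hr/pto.pdf", ["it/vpn.md"], 1.2),
    EvalResult("legal/nda.docx", ["legal/nda.docx"], 0.9),
    EvalResult("it/vpn.md", ["it/vpn.md"], 2.0),
]
print(summarize(results))
```

Tracking p95 rather than the average keeps a few slow outliers from hiding behind many fast answers.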

Companies that follow this path see ROI in under 90 days. Those trying to build everything at once? They burn out by month three.

Comments

Jasmine Oey

Okay but like… if you’re still using PGVector in 2024 you’re basically running a fax machine with a side of hope. LanceDB is the future and if you’re not streaming your docs in real time you’re just feeding your LLM ancient history. I once saw a compliance officer get fired because the system pulled a 2021 policy. 2021. Can you believe it? We’re talking about legal liability here, people. Not a TikTok trend.

January 17, 2026 AT 08:15

Marissa Martin

I get that RAG is important… but I just wish companies would stop treating LLMs like magic boxes. They’re not oracles. They’re fancy autocomplete with a side of hallucination. And if your HR department doesn’t know how to format their PDFs properly, no amount of vector databases will save you.

January 18, 2026 AT 03:57

James Winter

US companies overcomplicate everything. Just use one system. One database. One model. Stop with the federated nonsense. Canada does it right: simple, fast, no drama.

January 19, 2026 AT 23:40

Aimee Quenneville

So… you’re telling me the solution to ‘my AI is dumb’ is… more tech? Wow. Just wow. I’m sitting here with my 50-page PDF that’s just a screenshot of a Slack thread from 2022, and you’re talking about LanceDB and multimodal RAG like it’s a spa day. Can we just… fix the data first? Like… maybe don’t let your intern upload stuff into the system?

January 21, 2026 AT 06:27

Cynthia Lamont

Let me just say this: if your RAG system doesn’t have PII masking, you’re not just sloppy, you’re negligent. And if you think ‘basic metadata filtering’ is enough for a Fortune 500, you’ve clearly never been audited. I’ve seen companies lose millions because someone forgot to redact a social. It’s not a bug. It’s a crime. And yes, I’m reporting this to your legal team. You’re welcome.

January 21, 2026 AT 21:37

Kirk Doherty

Multi-agent RAG sounds cool but I’d rather have one smart system than five arguing bots. Also PGVector works fine for me.

January 22, 2026 AT 10:50

Dmitriy Fedoseff

Technology is never the problem. It’s the human assumption that automation replaces responsibility. You build a RAG system to make decisions easier, not to outsource ethics. When a lawyer asks for a contract analysis and gets an answer pulled from a 2020 draft because the system didn’t update… who’s accountable? The model? The engineer? Or the manager who said ‘just ship it’? We’re not building tools. We’re building trust. And trust, unlike vectors, can’t be indexed.

January 24, 2026 AT 02:36