Author: Mark Chomiczewski - Page 2

- Mark Chomiczewski

- Feb 16, 2026

- 10 Comments

Model Access Controls: Who Can Use Which LLMs and Why

Model access controls define who can use which large language models and under what conditions. Learn how RBAC, CBAC, and output filtering prevent data leaks, ensure compliance, and balance security with usability in enterprise AI deployments.

- Mark Chomiczewski

- Feb 11, 2026

- 9 Comments

Retrieval-Augmented Generation for Large Language Models: An End-to-End Guide

RAG lets large language models use your real-time data instead of outdated training info. It cuts hallucinations, saves money, and builds trust. Here’s how it works, what tools to use, and where it shines or fails.

- Mark Chomiczewski

- Feb 8, 2026

- 9 Comments

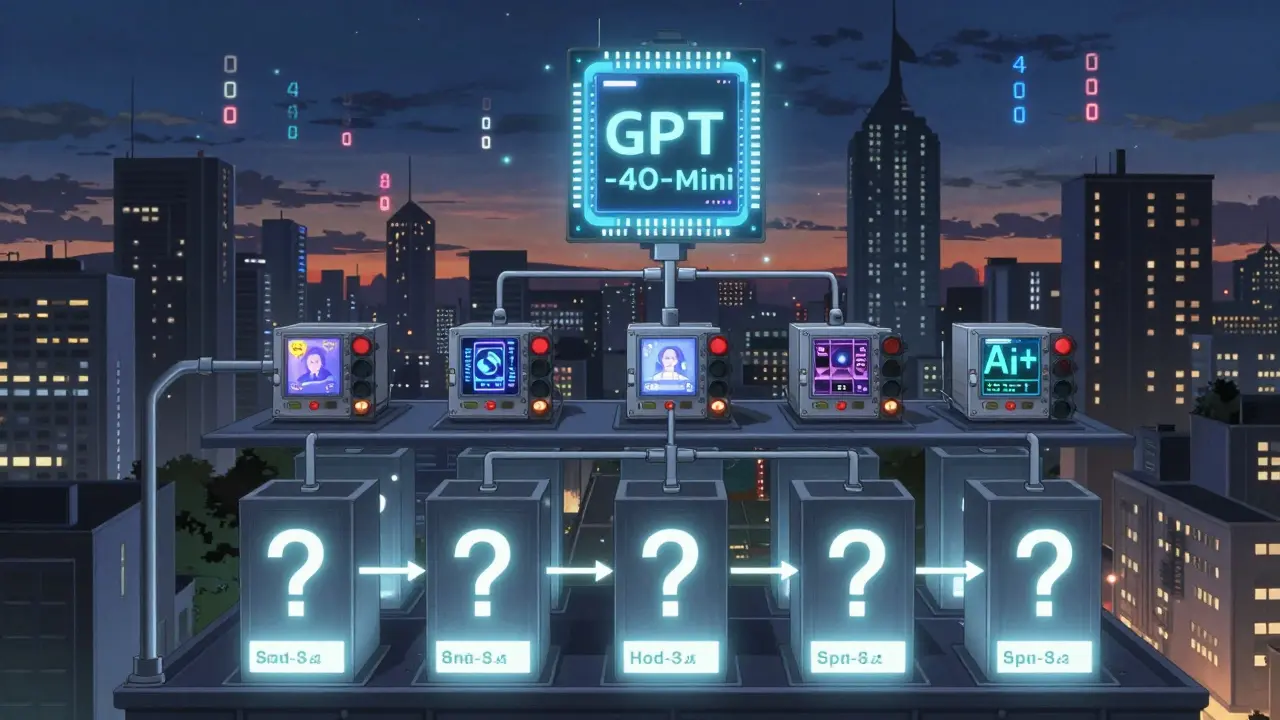

Architecture Decisions That Reduce LLM Bills Without Sacrificing Quality

Learn how smart architecture, not cheaper models, can cut LLM costs by 30-80% without sacrificing quality, using real techniques that top companies rely on today.

- Mark Chomiczewski

- Feb 7, 2026

- 7 Comments

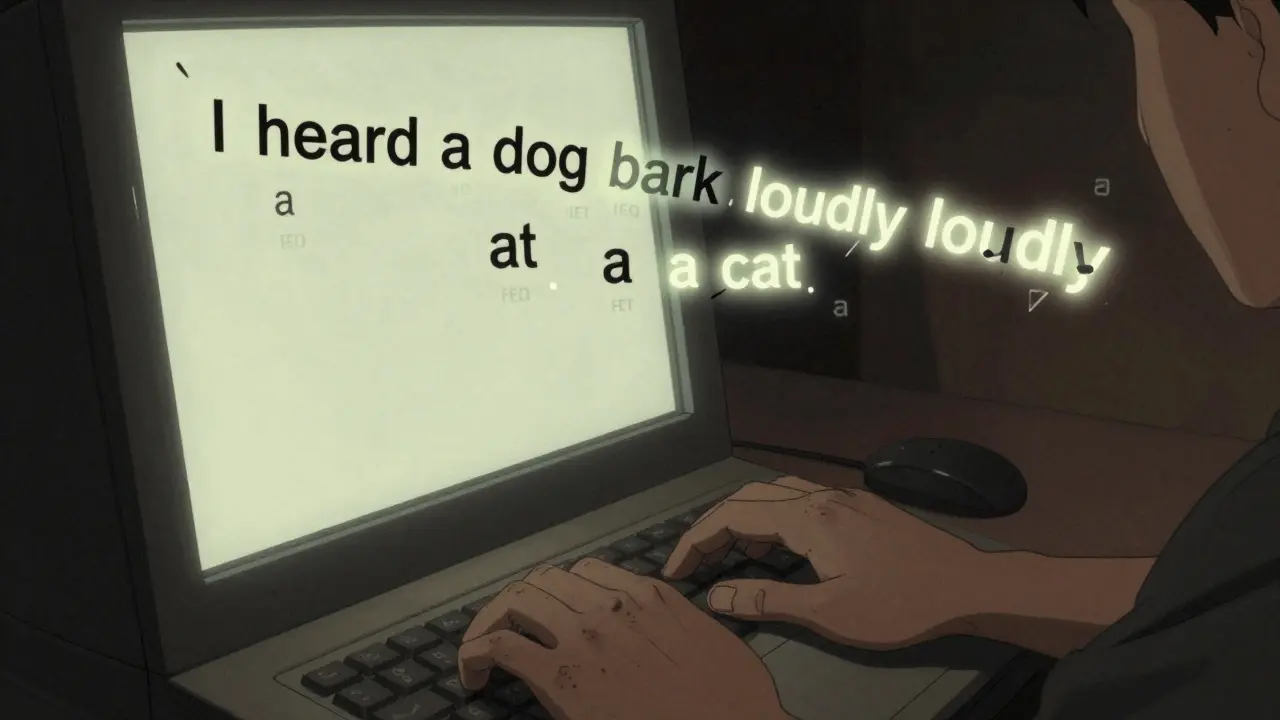

Tokens and Vocabulary in Large Language Models: How Text Becomes Computation

Tokens are the building blocks that let AI understand human language. Learn how subword tokenization works, why vocabulary size matters, and how token count impacts cost, speed, and accuracy in real-world LLM use.

- Mark Chomiczewski

- Feb 6, 2026

- 7 Comments

Prevent OOM Errors in LLM Inference: Memory Planning Techniques for 2026

Learn how to prevent Out-of-Memory errors in large language model inference using modern memory planning techniques like CAMELoT and Dynamic Memory Sparsification. Deploy larger models on existing hardware without costly upgrades.

- Mark Chomiczewski

- Feb 5, 2026

- 7 Comments

LLM Governance Policies: Data Safety and Compliance Guide for 2026

Understand how LLM governance policies balance innovation and safety in 2026. Learn data handling, risk management, and compliance steps for government and business use. Real-world examples and future trends included.

- Mark Chomiczewski

- Feb 4, 2026

- 8 Comments

Instruction Tuning for LLMs: How to Build Models That Follow Instructions Better

Instruction tuning trains large language models to follow user instructions more accurately. Learn how it works, its benefits such as reduced hallucinations, implementation steps, and future trends in AI development.

- Mark Chomiczewski

- Feb 3, 2026

- 6 Comments

Evaluation Datasets for Large Language Model Agent Benchmarks: What Works, What Doesn’t, and What’s Next

Evaluation datasets for LLM agents reveal hidden weaknesses in reasoning, safety, and real-world performance. Learn which benchmarks still work, which are broken, and how to build a reliable evaluation strategy.

- Mark Chomiczewski

- Feb 2, 2026

- 5 Comments

Self-Supervised Learning for Generative AI: How Models Learn from Unlabeled Data

Self-supervised learning powers today's top generative AI models by learning from unlabeled data. Discover how SSL works, its real-world uses, costs, and why it's replacing traditional supervised methods in AI development.

- Mark Chomiczewski

- Jan 31, 2026

- 9 Comments

Privacy and Data Governance for Generative AI: Protecting Sensitive Information at Scale

Generative AI is exposing sensitive data at scale, but the solution isn't to block it. Learn how to build real data governance that protects privacy, meets global regulations, and keeps employees productive.

- Mark Chomiczewski

- Jan 30, 2026

- 8 Comments

Prompt Injection Risks in Large Language Models: How Attacks Work and How to Stop Them

Prompt injection attacks trick large language models into ignoring their instructions using clever text inputs. Learn how these attacks work, real-world examples, and practical defenses to protect your AI systems.

- Mark Chomiczewski

- Jan 26, 2026

- 10 Comments

Future Trajectories and Emerging Trends in AI-Assisted Development in 2026

By 2026, AI-assisted development has transformed from a productivity tool into the core of software engineering. Learn how specialized AI models, autonomous agents, edge computing, and regulatory changes are reshaping how code is written, and what that means for developers.