Category: Artificial Intelligence

- Mark Chomiczewski

- Mar, 2 2026

- 0 Comments

Hybrid Recurrent-Transformer Designs: Do They Help Large Language Models?

Hybrid recurrent-transformer designs combine the efficiency of Mamba with the reasoning power of attention to solve long-context bottlenecks in large language models. They're already powering production systems like Hunyuan-TurboS and AMD-HybridLM.

- Mark Chomiczewski

- Feb, 28 2026

- 5 Comments

Transfer Learning in NLP: How Pretraining Made Large Language Models Possible

Transfer learning in NLP lets models learn language from massive text datasets, then adapt to specific tasks with minimal data. This approach made powerful AI accessible to everyone, not just tech giants.

- Mark Chomiczewski

- Feb, 27 2026

- 3 Comments

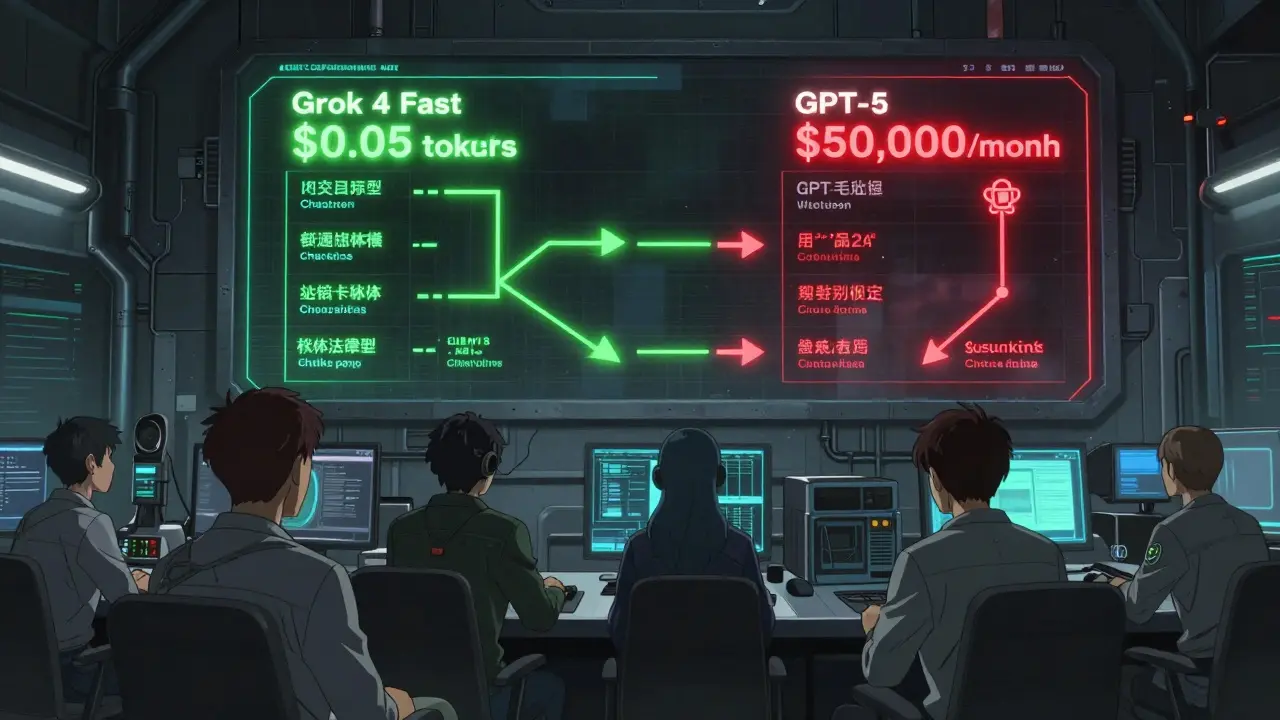

Cost-Quality Frontiers: How to Pick the Best Large Language Model for Maximum ROI

Learn how to pick the best large language model for your business by balancing cost and quality. Discover which models deliver maximum ROI in 2026 and where to use them.

- Mark Chomiczewski

- Feb, 26 2026

- 3 Comments

Guardrails for Large Language Models: How to Design and Enforce AI Safety Policies

Learn how enterprise-grade guardrails for large language models are designed, enforced, and audited to ensure safety, compliance, and reliability in real-world AI systems as of 2026.

- Mark Chomiczewski

- Feb, 25 2026

- 6 Comments

Email and CRM Automation with Large Language Models: Personalization at Scale

LLM-powered email and CRM automation is transforming how businesses handle customer communication. With real-world results like 80% fewer tickets and 64% lower costs, companies are moving beyond templates to true personalization at scale.

- Mark Chomiczewski

- Feb, 24 2026

- 4 Comments

Unit Economics of Large Language Model Features: Pricing by Task Type

Learn how LLM pricing works by task type, from input/output token costs to thinking tokens and budget models. Discover real-world strategies to cut AI expenses by up to 70% in 2026.

- Mark Chomiczewski

- Feb, 22 2026

- 6 Comments

Employment Law and Generative AI: Monitoring, Productivity Tools, and Worker Rights in 2026

By 2026, AI tools used in hiring, monitoring, and performance evaluations are legally regulated across key U.S. states. Employers must now disclose AI use, audit for bias, and give workers rights to review and appeal algorithmic decisions.

- Mark Chomiczewski

- Feb, 21 2026

- 6 Comments

Inclusive Prompt Design for Diverse Users of Large Language Models

Inclusive prompt design ensures large language models work for everyone, not just native English speakers or tech-savvy users. Learn how this approach boosts accuracy, reduces frustration, and opens AI to millions who were previously excluded.

- Mark Chomiczewski

- Feb, 20 2026

- 7 Comments

The Future of Generative AI: Agentic Systems, Lower Costs, and Better Grounding

Generative AI is evolving into autonomous agents that plan, act, and learn. With costs falling and grounding improving, companies that adopt these systems now will lead the next wave of efficiency and innovation.

- Mark Chomiczewski

- Feb, 19 2026

- 9 Comments

Liability Considerations for Generative AI: Vendor, User, and Platform Responsibilities

In 2026, generative AI liability is no longer theoretical. Vendors, platforms, and users all face real legal risks, from copyright lawsuits to discrimination claims. Here’s what you need to know to avoid liability.

- Mark Chomiczewski

- Feb, 18 2026

- 7 Comments

How Generative AI, Blockchain, and Cryptography Are Together Redefining Digital Trust

Generative AI, blockchain, and cryptography are merging to create systems that prove AI outputs are authentic, private, and untampered. Real-world use cases in healthcare, finance, and supply chains are already cutting fraud and boosting trust.

- Mark Chomiczewski

- Feb, 17 2026

- 9 Comments

Data Curation for Generative AI: How to Build Bias-Free Training Datasets

Building high-quality training data for generative AI requires careful curation to avoid bias, noise, and inaccuracies. Learn how to clean, filter, and augment datasets to build fair, reliable models.